Autonomous Space Robotics Lab

UTIAS Multi-Robot Cooperative Localization and Mapping Dataset


Summary

This 2D indoor dataset collection consists of 9 individual datasets. Each dataset contains odometry and range-bearing measurement data from 5 robots, as well as accurate groundtruth data for all robot poses and for the positions of 15 landmarks. The collection is intended for studying cooperative localization (using only a team of robots), cooperative localization with a known map, and cooperative simultaneous localization and mapping (SLAM).

Data Collection Details

In each dataset, the robots drive to random waypoints in a 15m×8m indoor space while logging odometry data and range-bearing observations of landmarks and other robots. [Video example of the dataset collection process]

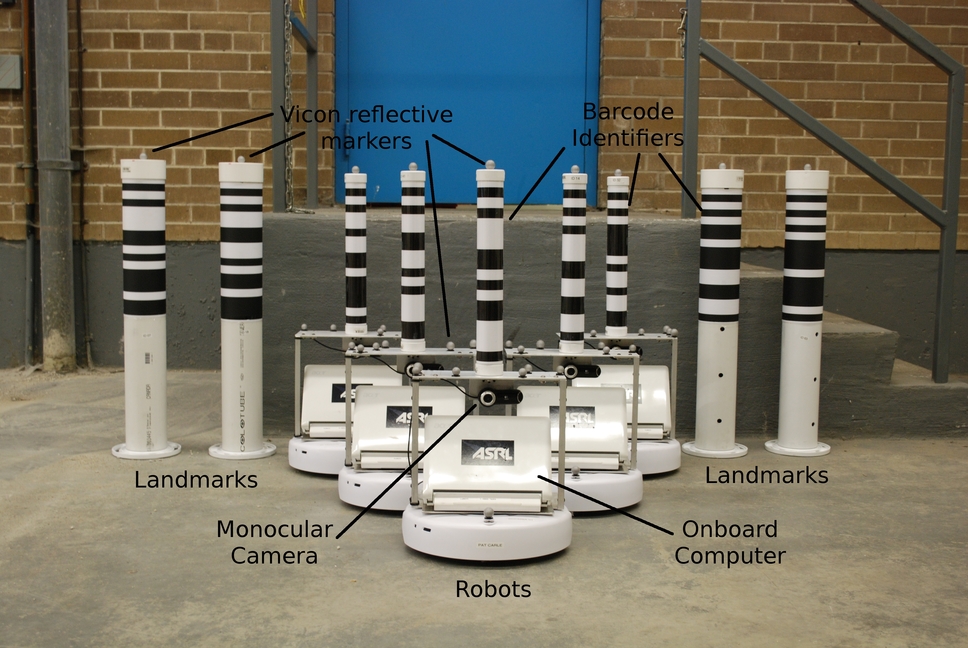

Robots

A fleet of 5 identical robots, each built on the iRobot Create (two-wheel differential drive) platform, was used to produce the datasets. Each robot is equipped with a laptop computer running Player and a monocular camera for sensing.

Landmarks

15 cylindrical tubes of identical dimensions are used as landmarks.

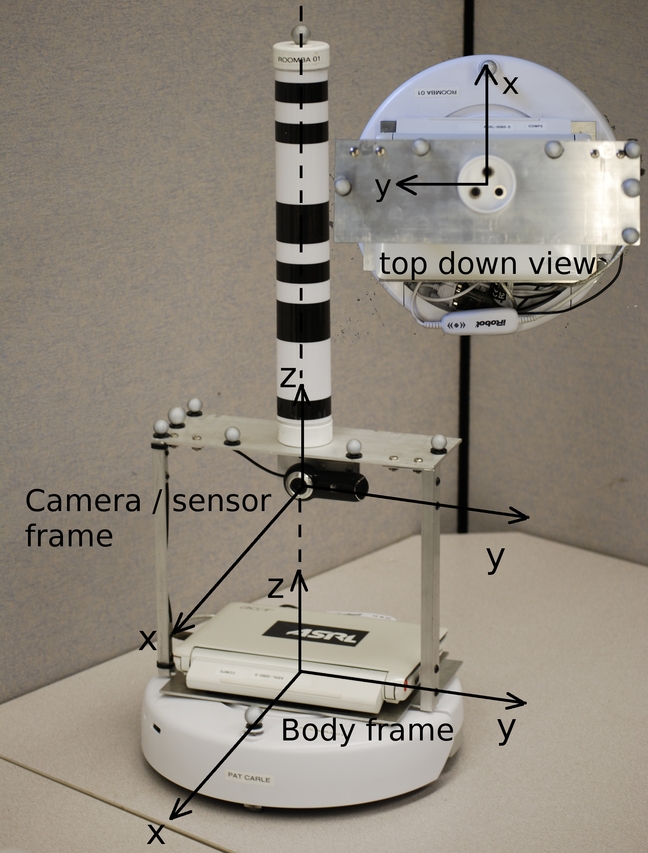

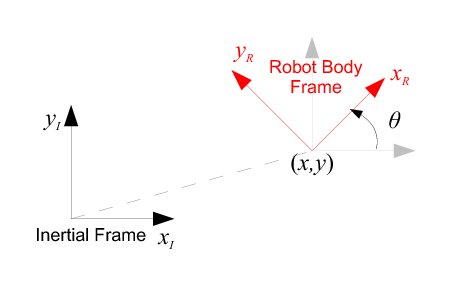

Odometry

Forward velocity commands v (along the x-axis of the robot body frame) and angular velocity commands ω (rotation about the z-axis of the robot body frame, using the right-hand rule) are logged as odometry data at an average rate of 67Hz.

Measurements

Each robot and landmark has a unique identification number encoded as a barcode of known dimensions. Images captured by the camera on each robot (at a resolution of 960×720) are rectified and processed to detect the barcodes, and the encoded identification number, together with the range and bearing to each barcode, is then extracted. The camera on each robot is mounted so that it is aligned with the robot body frame.
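Because the camera is aligned with the robot body frame, each range-bearing pair can be related directly to the robot pose. The following is a minimal sketch of the standard range-bearing observation model, assuming that the bearing φ is measured counter-clockwise from the robot's x-axis (consistent with the right-hand z-axis convention above) and that poses and points are expressed in the inertial (Vicon) frame. The function names are hypothetical and not part of the dataset tools.

```python
import math


def wrap_angle(a):
    """Wrap an angle [rad] into the interval [-pi, pi)."""
    return (a + math.pi) % (2.0 * math.pi) - math.pi


def expected_measurement(pose, point):
    """Predict range r [m] and bearing phi [rad] from a robot pose
    (x, y, theta) to a point (x, y), both in the inertial frame.
    ASSUMPTION: phi is counter-clockwise from the robot's x-axis."""
    x, y, theta = pose
    dx, dy = point[0] - x, point[1] - y
    r = math.hypot(dx, dy)
    phi = wrap_angle(math.atan2(dy, dx) - theta)
    return r, phi


def point_from_measurement(pose, r, phi):
    """Inverse model: place an observed point in the inertial frame."""
    x, y, theta = pose
    return x + r * math.cos(theta + phi), y + r * math.sin(theta + phi)


if __name__ == "__main__":
    pose = (1.0, 2.0, math.pi / 2)  # robot at (1, 2) facing the +y direction
    print(expected_measurement(pose, (1.0, 4.0)))  # point 2m straight ahead
```

The forward model is what a filter would compare against each logged (r, φ); the inverse model is a common way to initialize a new landmark estimate in SLAM.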


Occlusions

In creating dataset 9, barriers were placed in the environment to occlude the robots' views. This reduced the number of measurements the robots obtained, and the robots also had to avoid these barriers while driving in the workspace. [Video example]

Groundtruth

A 10-camera Vicon motion capture system provides the groundtruth pose (x,y,θ) of each robot and the groundtruth position (x,y) of each landmark at 100Hz, with accuracy on the order of 1×10⁻³m. The reference frame used by Vicon also serves as the inertial reference frame for the datasets.

Time Synchronization

The Network Time Protocol (NTP) daemon is used to synchronize the clocks of the computers on each robot with the groundtruth data logging computer. The average timing error is on the order of 1×10⁻³s.

File Format and Description

Each dataset has 17 text files:

- 1 mapping each robot and landmark to the identification number encoded by its barcode

- 1 for all landmark groundtruth

- 5 for robot groundtruth (one per robot)

- 5 for robot odometry (one per robot), and

- 5 for robot measurements (one per robot)

Barcode File

Filename: Barcodes.dat

This file specifies the barcode number associated with each subject number in the following format:

| subject # | barcode # |
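A small lookup in both directions is handy when relating detected barcodes to subjects. This is a minimal sketch, assuming whitespace-separated columns and treating blank lines or lines starting with "#" as headers to skip (the exact header layout is an assumption). `parse_barcodes` is a hypothetical name; the sample rows are made up, not real barcode values.

```python
def parse_barcodes(lines):
    """Return ({subject #: barcode #}, {barcode #: subject #}) from the
    lines of Barcodes.dat. ASSUMPTION: whitespace-separated columns,
    with blank lines and '#'-prefixed header lines skipped."""
    subject_to_barcode = {}
    for line in lines:
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        fields = line.split()
        subject_to_barcode[int(float(fields[0]))] = int(float(fields[1]))
    barcode_to_subject = {b: s for s, b in subject_to_barcode.items()}
    return subject_to_barcode, barcode_to_subject


if __name__ == "__main__":
    sample = ["# Subject #  Barcode #", "1  11", "6  66"]  # made-up rows
    s2b, b2s = parse_barcodes(sample)
    print(s2b, b2s)
```

With a real file, `with open("Barcodes.dat") as f: s2b, b2s = parse_barcodes(f)` works the same way, since a file object iterates over lines.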

Landmark Groundtruth File

Filename: Landmark_Groundtruth.dat

The file contains the mean groundtruth position (x,y) of all (static) landmarks as well as the standard deviations of the groundtruth measurements in the following format:

| subject # | x [m] | y [m] | x std-dev [m] | y std-dev [m] |
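For cooperative localization with a known map, this file is the map. The sketch below reads it into a dictionary keyed by subject number, under the same assumptions as any plain-text parse here (whitespace-separated columns, "#" header lines skipped). `parse_landmarks` is a hypothetical helper, and the sample row is invented.

```python
def parse_landmarks(lines):
    """Map landmark subject # -> (x, y, x_std, y_std) in metres.
    ASSUMPTION: whitespace-separated columns; '#' lines are headers."""
    landmarks = {}
    for line in lines:
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        fields = line.split()
        subject = int(float(fields[0]))
        x, y, x_std, y_std = (float(v) for v in fields[1:5])
        landmarks[subject] = (x, y, x_std, y_std)
    return landmarks


if __name__ == "__main__":
    lm = parse_landmarks(["# header", "6  1.5  -2.0  0.001  0.002"])
    # Drop the standard deviations to obtain a plain (x, y) map.
    known_map = {s: (x, y) for s, (x, y, _, _) in lm.items()}
    print(known_map)
```

The standard deviations are kept in the parsed result because they indicate how much the Vicon estimate of each static landmark varied.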

Robot Groundtruth File

Filename: Robot[Subject #]_Groundtruth.dat

This file contains the time-stamped groundtruth poses (x,y,θ) of robot [Subject #] in the following format:

| time [s] | x [m] | y [m] | θ [rad] |
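Groundtruth is logged at 100Hz, while odometry and measurements have their own timestamps, so evaluating an estimate usually means interpolating the groundtruth at another time. One reasonable approach, sketched below, is linear interpolation with θ taken along the shortest arc so that a wrap from +π to -π does not produce a spurious spin. This is an illustrative choice, not necessarily what the provided Matlab scripts do; `interpolate_pose` is a hypothetical name.

```python
import bisect
import math


def interpolate_pose(times, poses, t):
    """Linearly interpolate a groundtruth pose (x, y, theta) at time t.

    times: sorted timestamps [s]; poses: (x, y, theta) rows aligned with
    times. Theta is interpolated along the shortest arc and re-wrapped.
    """
    if not times[0] <= t <= times[-1]:
        raise ValueError("t is outside the groundtruth time span")
    i = bisect.bisect_right(times, t)  # times[i-1] <= t < times[i]
    if i == len(times):                # t equals the last timestamp
        return poses[-1]
    t0, t1 = times[i - 1], times[i]
    (x0, y0, th0), (x1, y1, th1) = poses[i - 1], poses[i]
    a = (t - t0) / (t1 - t0)
    dth = math.atan2(math.sin(th1 - th0), math.cos(th1 - th0))
    th = th0 + a * dth
    return (x0 + a * (x1 - x0), y0 + a * (y1 - y0),
            math.atan2(math.sin(th), math.cos(th)))
```

Here `bisect_right` guarantees t1 > t0, so the division is safe even when the query time coincides with a logged timestamp.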

Robot Odometry File

Filename: Robot[Subject #]_Odometry.dat

This file contains the time-stamped velocity commands (v,ω) of robot [Subject #] in the following format:

| time [s] | v [m/s] | ω [rad/s] |
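Since the robots are differential-drive platforms commanded with (v, ω), a natural baseline is to dead-reckon the logged commands with a unicycle model. The sketch below uses simple forward-Euler integration and holds each command constant until the next timestamp; both choices are assumptions, as is taking the initial pose from groundtruth. `dead_reckon` is a hypothetical name.

```python
import math


def dead_reckon(initial_pose, odometry):
    """Integrate (time, v, omega) rows with a unicycle model.

    Forward Euler: each command is held until the next timestamp.
    Returns a list of (time, x, y, theta), theta wrapped to [-pi, pi].
    """
    x, y, th = initial_pose
    t_prev, v, w = odometry[0]
    path = [(t_prev, x, y, th)]
    for t, v_next, w_next in odometry[1:]:
        dt = t - t_prev
        x += v * math.cos(th) * dt
        y += v * math.sin(th) * dt
        th = math.atan2(math.sin(th + w * dt), math.cos(th + w * dt))
        path.append((t, x, y, th))
        t_prev, v, w = t, v_next, w_next
    return path
```

Comparing such a trajectory against the robot groundtruth shows how quickly odometry alone drifts, which is the motivation for cooperative measurements.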

Robot Measurement Files

Filename: Robot[Subject #]_Measurement.dat

This file contains the time-stamped range and bearing measurements (r,φ) of robot [Subject #] in the following format:

| time [s] | measured subject # | r [m] | φ [rad] |

Images

Filename: [Timestamp]_camera_00.jpg

Images captured by each robot's camera are available for dataset 7. The images have already been undistorted. The focal lengths of each robot's camera are as follows:

| Robot | fx [pixels] | fy [pixels] |
|---|---|---|
| Robot 1 | 795.06275 | 799.35868 |
| Robot 2 | 793.80267 | 796.90655 |
| Robot 3 | 797.14344 | 801.16701 |
| Robot 4 | 794.03435 | 797.41190 |
| Robot 5 | 794.45610 | 798.81932 |
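With undistorted images and these focal lengths, a pinhole model relates pixel coordinates to bearing and approximate distance. The sketch below is one common approximation, not necessarily the pipeline used to produce the measurement files. Assumptions: the principal point cx is the image centre of a 960-pixel-wide image (no principal point is listed), image column u increases to the right, and the barcode height passed in is a hypothetical input, since its dimensions are not given here.

```python
import math

# Focal lengths (fx, fy) in pixels, from the table above.
FOCAL = {
    1: (795.06275, 799.35868),
    2: (793.80267, 796.90655),
    3: (797.14344, 801.16701),
    4: (794.03435, 797.41190),
    5: (794.45610, 798.81932),
}
IMAGE_WIDTH = 960  # pixels


def pixel_to_bearing(robot, u, cx=IMAGE_WIDTH / 2):
    """Bearing [rad] of image column u, positive to the left of centre.
    ASSUMPTION: cx defaults to the image centre, not a calibrated value."""
    fx, _ = FOCAL[robot]
    return math.atan2(cx - u, fx)


def depth_from_height(robot, h_pixels, target_height_m):
    """Depth [m] along the optical axis of a target of known height that
    spans h_pixels image rows; roughly the range near the image centre.
    target_height_m is a hypothetical input (barcode size not listed)."""
    _, fy = FOCAL[robot]
    return fy * target_height_m / h_pixels
```

Positive bearings to the left match a counter-clockwise convention about the body z-axis, given that the camera is aligned with the robot body frame.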

Data Usage

Cooperative Localization with Robots Only

All measurements associated with landmarks (subject numbers 6-20) should be ignored. Landmark groundtruth data can also be ignored. Robot groundtruth data should be used to evaluate localization performance.
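Most usage variants on this page amount to discarding rows. A minimal sketch, assuming measurement rows have already been parsed into (time, subject #, r, φ) tuples: one helper keeps only allowed subjects (robots 1-5 here, or any reduced set of robots and landmarks) with an optional range cap, and another checks a distance threshold between two groundtruth positions, as needed when limiting communication range. All names are hypothetical.

```python
import math

ROBOTS = range(1, 6)      # subject numbers 1-5
LANDMARKS = range(6, 21)  # subject numbers 6-20


def filter_measurements(measurements, allowed_subjects, max_range=None):
    """Keep (time, subject, r, phi) rows whose measured subject is allowed
    and, if max_range [m] is given, whose range r does not exceed it."""
    allowed = set(allowed_subjects)
    return [
        m for m in measurements
        if m[1] in allowed and (max_range is None or m[2] <= max_range)
    ]


def within_comm_range(pos_i, pos_j, max_dist):
    """True if two groundtruth positions (x, y) are at most max_dist apart."""
    return math.dist(pos_i, pos_j) <= max_dist
```

Note that removing a robot entirely also means dropping its own groundtruth, odometry, and measurement files, not just the rows in which it is observed.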

Cooperative Localization with a Known Map

Landmark groundtruth data should be used to construct the map. Robot groundtruth data should be used to evaluate localization performance.
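The page does not prescribe an evaluation metric. Root-mean-square position error against time-aligned groundtruth is one common choice, sketched here under the assumption that each estimate has already been paired with the groundtruth pose at the same timestamp. `position_rmse` is a hypothetical name.

```python
import math


def position_rmse(estimates, groundtruth):
    """Root-mean-square position error [m] between time-aligned rows
    whose first two entries are x and y."""
    if not estimates or len(estimates) != len(groundtruth):
        raise ValueError("need two non-empty sequences of equal length")
    sq = sum((e[0] - g[0]) ** 2 + (e[1] - g[1]) ** 2
             for e, g in zip(estimates, groundtruth))
    return math.sqrt(sq / len(estimates))
```

Heading error can be reported separately, wrapping the angle difference to [-π, π] before squaring.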

Cooperative SLAM

Use all data. Robot and landmark groundtruth data should be used to evaluate localization and mapping performance. [Example]

Reducing the Number of Robots or Landmarks

Ignore all data associated with the subject numbers of the robots and landmarks to be excluded.

Limiting Measurement Range

Ignore measurements with range (r) above a chosen threshold.

Limiting Communication Range

Use groundtruth data to determine whether and when robots are allowed to exchange information for state estimation. [Example]

Dataset Files

Each dataset can be downloaded individually as a zip archive from ftp://asrl3.utias.utoronto.ca/MRCLAM/ . Videos taken by cameras overlooking the data collection area, as well as the robot camera images from dataset 7, are also available for download.

Internet Explorer users may need to manually add "" before the ftp address.

Important note: The dataset is distributed through ftp links. Some browsers no longer enable ftp links by default, so downloading through a browser may require changing its settings. Alternatively, the wget command can be used to download the files without a browser.

| Dataset | Compressed size | Extracted size | Duration | Videos | Notes |
|---|---|---|---|---|---|
| Dataset 1 | 6MB | 41MB | 1500s | video, video | |
| Dataset 2 | 7MB | 50MB | 1900s | video, video | |
| Dataset 3 | 8MB | 56MB | 1800s | video, video | |
| Dataset 4 | 7MB | 42MB | 1400s | video, video | |
| Dataset 5 | 10MB | 68MB | 2300s | video, video | |
| Dataset 6 | 4MB | 26MB | 900s | video, video | |
| Dataset 7 | 4MB | 26MB | 900s | video, video | |
| Dataset 7 Images [Sample Image] | | | | | size: 22GB |
| Dataset 8 | 17MB | 116MB | 4400s | video, video | |
| Dataset 9 | 11MB | 58MB | 2100s | video | Barriers were used in the workspace for this dataset. |

Access Tools

The following tools are provided for the convenience of dataset users.

Matlab Scripts

- loadMRCLAMdataSet.m Run this script in the main directory of a dataset to parse the text files into Matlab variables.

- sampleMRCLAMdataSet.m Run this script after loadMRCLAMdataSet to sample the data at fixed time intervals. The default sampling frequency is 50Hz.

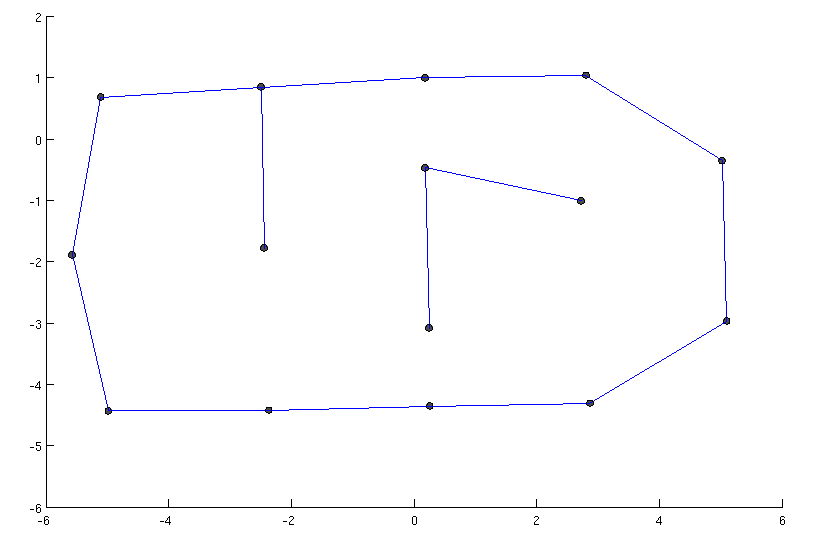

- animateMRCLAMdataSet.m Run this script after sampleMRCLAMdataSet to see an animation of the groundtruth data.
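For users working outside Matlab, the fixed-rate sampling step can be approximated in Python. This sketch assumes a zero-order hold (each grid point takes the most recent value at or before it), which suits piecewise-constant velocity commands; it has not been checked against how sampleMRCLAMdataSet.m actually resamples, and `sample_zoh` is a hypothetical name. The 50Hz default mirrors the script's stated default.

```python
import bisect


def sample_zoh(times, values, rate_hz=50.0):
    """Resample a sorted time series onto a fixed grid starting at
    times[0] with step 1/rate_hz, holding the latest value at or before
    each grid time. Returns (grid, sampled_values)."""
    dt = 1.0 / rate_hz
    n = int((times[-1] - times[0]) / dt) + 1
    grid = [times[0] + k * dt for k in range(n)]
    sampled = [values[bisect.bisect_right(times, t) - 1] for t in grid]
    return grid, sampled
```

For groundtruth poses, linear interpolation (with angle wrapping) is usually preferable to a hold, since the pose changes continuously between samples.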

Credits

This dataset was the work of Keith Leung, Yoni Halpern, Tim Barfoot, and Hugh Liu.

If you use the data provided by this website in your own work, please use the following citation:

Leung K Y K, Halpern Y, Barfoot T D, and Liu H H T. “The UTIAS Multi-Robot Cooperative Localization and Mapping Dataset”. International Journal of Robotics Research, 30(8):969–974, July 2011.