Datasets

The following datasets are intended for research and educational purposes.

Feel free to use them, and please cite them where possible.

UTIAS Long-Term Localization and Mapping Dataset

The UTIAS In the Dark and UTIAS Multiseason datasets consist of stereo imagery collected on a Clearpath Grizzly Robotic Utility Vehicle. They feature a wide range of natural appearance change due to both lighting and seasonal conditions. In the Dark consists of 39 traversals of a 260-metre path, driven approximately once per hour for 30 consecutive hours. Multiseason consists of 136 runs on a separate off-road path, collected continuously between January and May. Both datasets were collected using Visual Teach & Repeat with Multi-Experience Localization to ensure that the robot accurately drove the same path on each run. The data will be of interest to researchers studying localization and mapping under appearance change. Python tools are provided to facilitate their use.

Motion-Distorted Lidar Simulation Dataset

This dataset contains sequences of simulated lidar data in urban driving scenarios. Each motion-distorted lidar frame covers one revolution (a 360-degree field of view) and contains 128,000 measurements, with frames produced at 10 Hz. Motion distortion refers to the “rolling-shutter” effect of commonly used 3D lidar sensors: because the sensor keeps moving while it sweeps, the measurements within a single frame are captured at different times and therefore from different poses. Ground-truth poses are also given for the trajectories of all vehicles. Each lidar measurement is also labelled with the unique ID of the object it hit (vehicles only).
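To make the rolling-shutter effect concrete, the sketch below estimates a capture time for every point in a frame and then "de-skews" planar points into a single reference frame. It is illustrative only: the function names are hypothetical, and it assumes a constant spin rate, a sweep that starts at azimuth 0 rad, and a first-order constant-velocity motion model. None of these details, nor the dataset's file layout, are taken from the dataset documentation.

```python
import numpy as np

FRAME_RATE_HZ = 10.0  # one full revolution per frame, 10 frames per second


def point_timestamps(frame_start: float, azimuths: np.ndarray,
                     rate_hz: float = FRAME_RATE_HZ) -> np.ndarray:
    """Estimate each point's capture time within one revolution.

    Hypothetical assumptions: constant spin rate, and a sweep that starts
    at azimuth 0 rad and increases toward 2*pi.
    """
    period = 1.0 / rate_hz                                 # seconds per revolution
    frac = np.mod(azimuths, 2.0 * np.pi) / (2.0 * np.pi)   # fraction of sweep completed
    return frame_start + frac * period


def deskew_2d(points_xy: np.ndarray, times: np.ndarray, t_ref: float,
              v_xy: np.ndarray, omega: float) -> np.ndarray:
    """Re-express planar points in the sensor frame at time t_ref.

    First-order constant-velocity model (an assumption): over dt = t - t_ref
    the sensor rotates by omega*dt and translates by v_xy*dt, both expressed
    in the t_ref frame.
    """
    dt = times - t_ref
    theta = omega * dt
    c, s = np.cos(theta), np.sin(theta)
    x, y = points_xy[:, 0], points_xy[:, 1]
    # Rotate each point by its own yaw offset, then add its own translation.
    x_ref = c * x - s * y + v_xy[0] * dt
    y_ref = s * x + c * y + v_xy[1] * dt
    return np.stack([x_ref, y_ref], axis=1)
```

Under these assumptions, with 128,000 points per revolution at 10 Hz, consecutive points are about 0.1 s / 128,000 ≈ 0.78 µs apart, so the first and last points of a frame are separated by nearly a full 0.1 s. At urban driving speeds that gap is large enough to visibly distort the point cloud.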

UTIAS Multi-Robot Cooperative Localization and Mapping Dataset

This 2D indoor dataset collection consists of 8 individual datasets. Each dataset contains odometry and range-and-bearing measurement data from 5 robots, as well as accurate ground-truth data for all robot poses and the positions of 15 landmarks. The collection is intended for studying cooperative localization (with only a team of robots), cooperative localization with a known map, and cooperative simultaneous localization and mapping (SLAM).
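Range-and-bearing measurements are typically compared against the values predicted from a robot pose and a target position. The sketch below shows the standard planar measurement model for that prediction. It is a generic illustration rather than the dataset's own tooling: the function names are hypothetical, and it assumes angles in radians, a sensor located at the robot centre, and bearings measured counter-clockwise from the robot's heading. Check these conventions against the dataset documentation before use.

```python
import math


def wrap_angle(a: float) -> float:
    """Wrap an angle in radians to the interval [-pi, pi)."""
    return (a + math.pi) % (2.0 * math.pi) - math.pi


def expected_range_bearing(robot_pose, target_xy):
    """Predict the range and bearing from a planar robot pose to a target.

    robot_pose: (x, y, theta) in the world frame (theta in radians).
    target_xy:  (x, y) of the observed landmark in the world frame.
    Assumes the sensor sits at the robot centre (hypothetical simplification).
    """
    x, y, theta = robot_pose
    dx = target_xy[0] - x
    dy = target_xy[1] - y
    rng = math.hypot(dx, dy)                            # Euclidean distance
    bearing = wrap_angle(math.atan2(dy, dx) - theta)    # angle relative to heading
    return rng, bearing
```

In a filter or smoother, the residual would be the measured range minus the predicted range, and `wrap_angle(measured_bearing - predicted_bearing)` for the bearing, so that a bearing error near ±π is not mistaken for a large one.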

Leung K Y K, Halpern Y, Barfoot T D, and Liu H H T. “The UTIAS Multi-Robot Cooperative Localization and Mapping Dataset”. International Journal of Robotics Research, 30(8):969–974, July 2011. doi:10.1177/0278364911398404. (pdf)

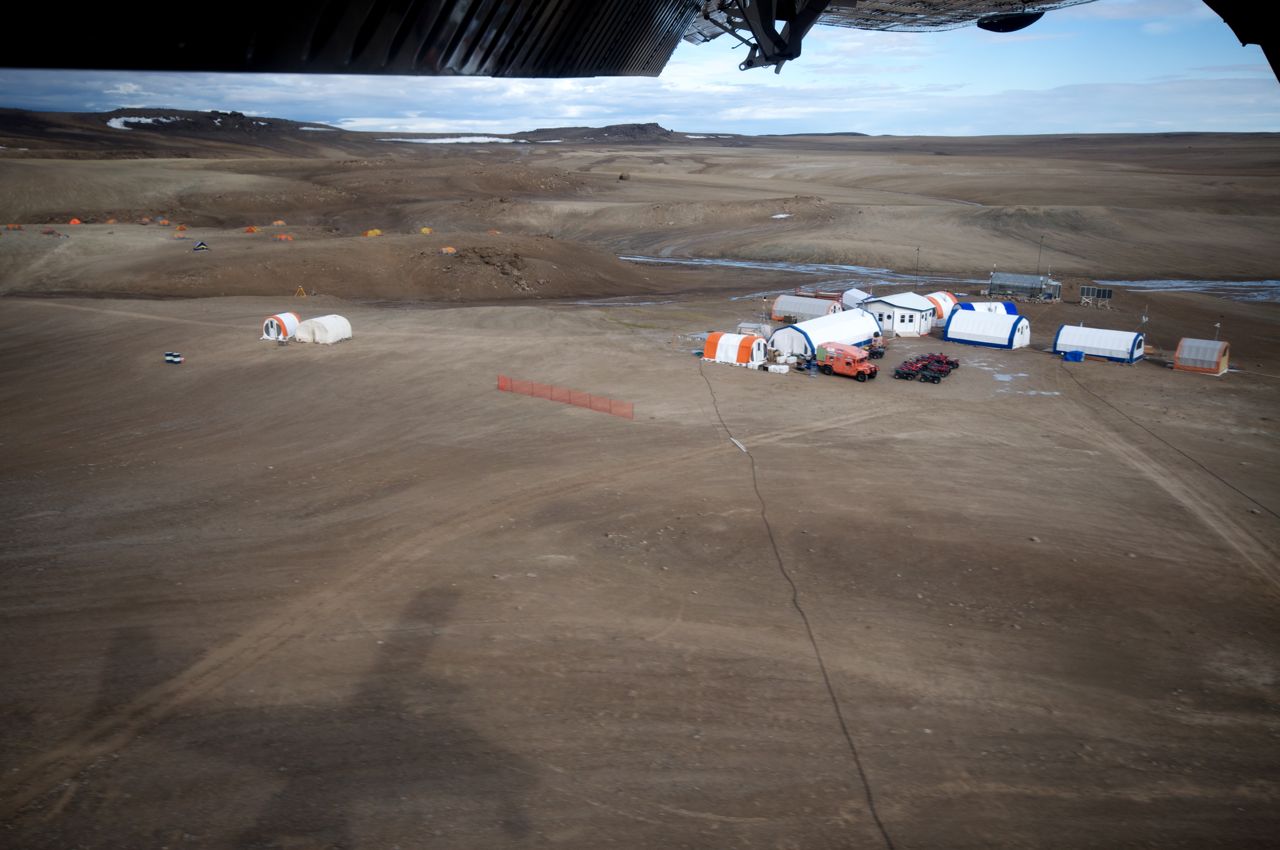

Devon Island Rover Navigation Dataset

The Devon Island Rover Navigation Dataset is a collection of data suitable for robotics research, gathered at a Mars/Moon analogue site on Devon Island, Nunavut (75°22'N, 89°41'W). The dataset is split into two parts. The first part contains rover traverse data collected over a ten-kilometre traverse: stereo imagery, sun vectors, inclinometer data, and ground-truth position information from a differential global positioning system (DGPS). The second part contains long-range localization data: 3D laser range scans, image panoramas, digital elevation maps, and GPS data useful for global position estimation. All images are available in common formats, and the other data are provided in human-readable text files. To facilitate use of the data, MATLAB parsing scripts are included.

Furgale P T, Carle P, Enright J, and Barfoot T D. “The Devon Island Rover Navigation Dataset”. International Journal of Robotics Research, 31(6):707–713. doi:10.1177/0278364911433135. (pdf)

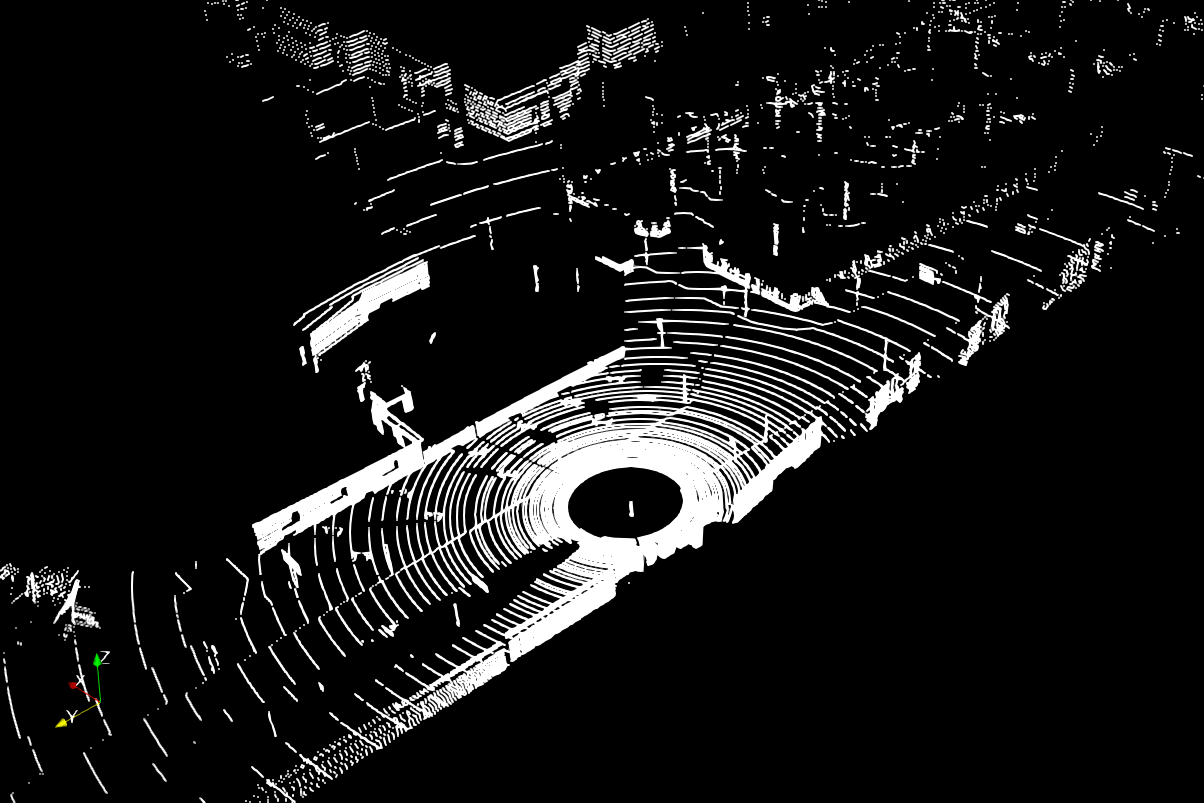

Canadian Planetary Emulation Terrain 3D Mapping Dataset

The Canadian Planetary Emulation Terrain 3D Mapping Dataset is a collection of three-dimensional laser scans gathered at two planetary analogue rover test facilities in Canada. These facilities offer emulated planetary terrain in controlled environments, at scales manageable for algorithm development. The dataset is subdivided into four subsets, gathered using panning laser rangefinders mounted on mobile rover platforms. The data should be of interest to field robotics researchers developing algorithms for laser-based simultaneous localization and mapping (SLAM) of three-dimensional, unstructured, natural terrain. All of the data are provided in human-readable text files and are accompanied by MATLAB parsing scripts.

Tong C, Gingras D, Larose K, Barfoot T D, and Dupuis E. “The Canadian Planetary Emulation Terrain 3D Mapping Dataset”. International Journal of Robotics Research (IJRR), 2013. doi:10.1177/0278364913478897. (pdf)

Gravel Pit Lidar-Intensity Imagery Dataset

The Gravel Pit Lidar-Intensity Imagery Dataset is a collection of 77,754 high-framerate laser range and intensity images gathered at a planetary analogue site in Sudbury, Ontario, Canada. The data were collected during a visual teach and repeat experiment in which a 1.1 km route was taught and then autonomously re-traversed (i.e., the robot drove in its own tracks) every 2–3 hours over 25 hours. The dataset is subdivided into the individual 1.1 km traversals of the same route, recorded at varying times of day (ranging from full sunlight to full darkness). These data should be of interest to researchers who develop algorithms for visual odometry, simultaneous localization and mapping (SLAM), or place recognition in three-dimensional, unstructured, natural environments.

Anderson S, McManus C, Dong H, Beerepoot E, and Barfoot T D. “The Gravel Pit Lidar-Intensity Imagery Dataset”. University of Toronto Technical Report ASRL-2012-ABL001. (pdf)